This is the second post in a series on Azure App Service architecture. The first one introduced the concepts of App and Plan, along with Pricing Tiers and Instance Sizes. This one goes into more detail on how, as I understand it, App Service Plans are architected internally. It comes out of working with Azure Web Apps and looking for more detailed information on how they are implemented in Azure.

Note: This article is based solely on information gathered from publicly available sources, mainly the Microsoft Azure documentation site and GitHub, wrapped with my own understanding and conclusions.

Let’s have a look at what an App Service Plan is actually made of.

Compute

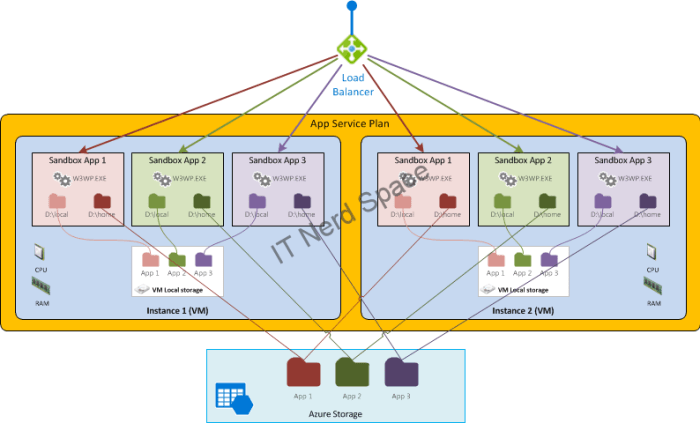

We’ve seen in the first post that an App Service Plan is made up of one or more VM instances (which can be dedicated or shared depending on the Service Tier). We’ve also seen that you can deploy multiple apps to the same Plan. The apps will then all run on all the instances of the Plan.

Inside each VM instance of the Plan, your apps are deployed in sandboxes:

- Sandbox mechanism

Azure App Services run in a secure environment called a sandbox. Each app runs inside its own sandbox, isolating its execution from other instances on the same machine as well as providing an additional degree of security and privacy.

The sandbox mechanism mitigates the risk of service disruption due to resource contention and depletion in two ways: it ensures that each app receives a minimum guarantee of resources and quality of service, and conversely it enforces limits so that an app cannot disrupt other apps executing concurrently on the same machine.

Storage

From a storage perspective, and especially when scaling out (horizontally), it is useful to understand which storage capabilities are available to an app deployed in an App Service Plan. There are two kinds: temporary storage and persisted storage.

- Temporary files

Within the context of the application deployed in the Web App, a number of common Windows locations use temporary storage on the local machine. For instance:

%APPDATA% points to something like D:\local\AppData.

%TMP% goes to D:\local\Temp.

Unlike Persisted files, these files are not shared among site instances. Also, you cannot rely on them staying there. For instance, if you stop a site and restart it, you’ll find that all of these folders get reset to their original state.

- Persisted files

Every Azure Web App has a home directory stored/backed by Azure Storage. This network share is where applications store their content. The sandbox implements a dynamic symbolic link in kernel mode which maps d:\home to the customer home directory.

These files are shared between all instances of your site (when you scale it out to multiple instances). Internally, the way this works is that they are stored in Azure Storage instead of living on the local file system. They are rooted in d:\home, which can also be found using the %HOME% environment variable.
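To make the distinction concrete, here is a minimal Python sketch that labels a Windows path as temporary or persisted, based only on the two roots mentioned above (D:\local and D:\home). The function names and the "other" label are my own illustration, not part of any Azure API, and real deployments may expose different roots.

```python
import ntpath

# Roots taken from the paths quoted in this post; treat them as assumptions.
PERSISTED_ROOT = r"D:\home"
TEMPORARY_ROOT = r"D:\local"


def _is_under(path: str, root: str) -> bool:
    """Return True if `path` equals `root` or lies below it (Windows rules)."""
    # normpath cleans up separators; normcase makes the comparison case-insensitive.
    path = ntpath.normcase(ntpath.normpath(path))
    root = ntpath.normcase(ntpath.normpath(root))
    return path == root or path.startswith(root + "\\")


def classify_storage(path: str) -> str:
    """Label a path as 'temporary', 'persisted', or 'other' (hypothetical helper)."""
    if _is_under(path, TEMPORARY_ROOT):
        return "temporary"
    if _is_under(path, PERSISTED_ROOT):
        return "persisted"
    return "other"
```

Anything your app needs to keep across restarts, or to share between instances, should end up under a path this helper would label as persisted.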

Now let’s put everything together and see how it looks. First, let’s start with a single app deployed on a Service Plan with a single VM instance:

Now let’s see how it looks when we scale out the plan to two VM instances. Each instance of the app has its own separate temporary storage on its VM, while all instances share the persisted storage (where the app files are deployed).
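The scale-out behaviour can be mimicked with a toy model in Python. This is only an illustration of the sharing rules described in this post, not of how Azure implements them: the `Instance` class, its fields and the file names are all made up, plain dictionaries stand in for the file systems, and a restart is simplified to emptying the temporary storage.

```python
from dataclasses import dataclass, field


@dataclass
class Instance:
    """Hypothetical stand-in for one VM instance running the app."""
    name: str
    home: dict  # persisted storage: the SAME dict object on every instance
    local: dict = field(default_factory=dict)  # temporary storage: one per instance

    def restart(self) -> None:
        # Simplification: temporary storage is reset, persisted storage is untouched.
        self.local = {}


# One shared "home" for the whole plan, two instances after scaling out.
shared_home: dict = {}
vm_a = Instance("vm-a", shared_home)
vm_b = Instance("vm-b", shared_home)

vm_a.home["site/wwwroot/index.html"] = "hello"  # visible from both instances
vm_a.local["Temp/cache.bin"] = "scratch data"   # visible from vm-a only

print("index.html" in str(vm_b.home))   # the other instance sees the deployed file
print("Temp/cache.bin" in vm_b.local)   # but not the other VM's temporary file

vm_a.restart()
print(vm_a.local)                        # temporary storage is gone after a restart
```

The practical consequence is that anything an instance caches in temporary storage has to be treated as disposable and rebuilt on demand.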

How does it look if we now deploy multiple Apps to this same App Service Plan?

Notice how, within its sandbox, every app on the same VM still sees its persisted storage as D:\home and its temporary storage as D:\local. That’s quite nice!

Console Access

From the Azure Portal you can access the app’s console: the Kudu tools give access to the web app at the sandbox level. From there you can access D:\local and D:\home. The hostname command shows the hostname of the VM (i.e. the instance the console is connected to).
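The same information can be gathered from inside the app itself. The sketch below is a small Python helper that reports the hostname together with the environment variables mentioned earlier (%HOME%, %TMP%, %APPDATA%). The function name and the "<unset>" placeholder are my own choices, and which variables are actually set depends on the environment it runs in.

```python
import os
import socket


def describe_instance(env=None) -> dict:
    """Collect the hostname and storage-related variables (hypothetical helper)."""
    # Allow a custom mapping to be passed in, which makes the helper easy to test.
    env = os.environ if env is None else env
    return {
        "hostname": socket.gethostname(),       # the VM the code is running on
        "home": env.get("HOME", "<unset>"),     # persisted storage root
        "tmp": env.get("TMP", "<unset>"),       # temporary storage
        "appdata": env.get("APPDATA", "<unset>"),
    }


if __name__ == "__main__":
    for key, value in describe_instance().items():
        print(f"{key}: {value}")
```

Calling it from each instance of a scaled-out plan should show different hostnames but the same home location, which matches the picture described above.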